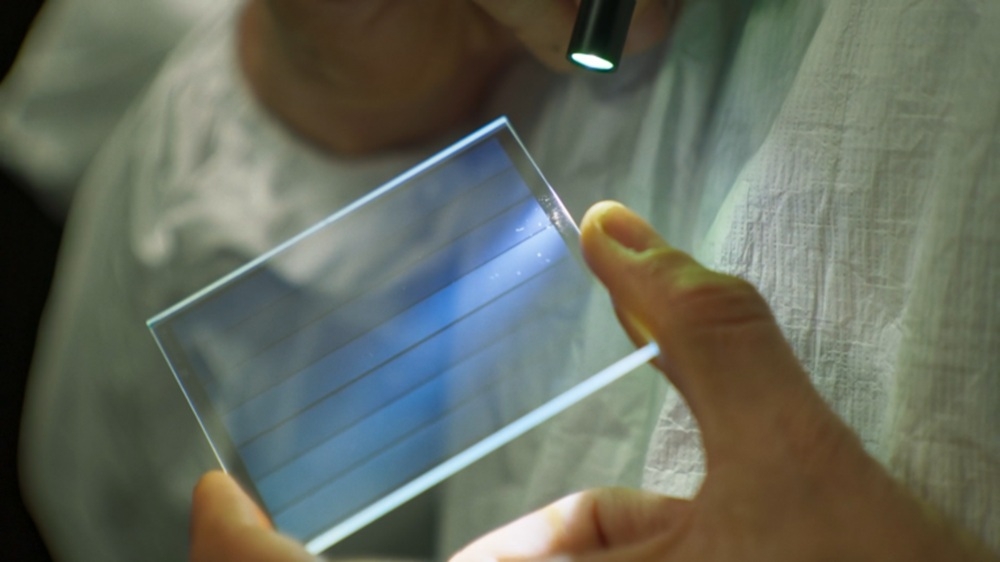

PARIS, April 2 — Several world champion bridge players had to accept defeat at the hands of an artificial intelligence system.

The feat had never previously been achieved. The victories mark an important step in the development of AI because the system relies on ‘white box’ AI, which acquires skills in a more human way. That ability matters more for winning at bridge than at other strategy games such as chess.

Until now, to demonstrate the potential of artificial intelligence, humans were pitted against machines.

The AI trained itself by playing billions of games, in a process known as “deep learning,” and faced a human adversary, the champion in the category.

That technique works perfectly for winning against top chess, checkers or go players, but not for bridge, a card game that requires more communication skills.

“NooK,” a next-generation artificial intelligence, was trained for this very purpose. It managed to beat eight world champion bridge players during a tournament held at the end of March in Paris and organised by French company NukkAI, which trained NooK.

One reason why bridge is so challenging is that it incorporates features that are not yet well understood by various forms of artificial intelligence.

This card game demands that players work with incomplete information, and they must react to the behaviour of other players at the table.

These kinds of skills are difficult for a machine to achieve. That is, until NooK. At the NukkAI Challenge bridge tournament in Paris, the machine won 67 of the 80 rounds played, a win rate of about 84 per cent.

The concept of explainable artificial intelligence

But how can this feat be explained? How did NooK manage to acquire skills that are more human-like than technological? It all comes down to the concept of explainable artificial intelligence.

One of the main pitfalls of ‘black box’ artificial intelligence, used for chess, for example, is that its decisions are enigmatic for humans or difficult for them to understand.

“Previous AI successes, like when playing humans against chess, are based on black box systems where the human can’t understand how decisions are made. By contrast the white box uses logic and probabilities just like a human,” explains Stephen Muggleton, professor in the department of computing at Imperial College London.

NooK, by contrast, represents a “white box” or “neurosymbolic” approach. Rather than learning by playing billions of rounds of a game, as in traditional deep learning, the AI first learns the rules of the game, then improves its play through practice.

It is a hybrid system based on rules and deep learning. “White box machine learning is closely related to the way we humans learn incrementally as we carry out everyday tasks,” says Stephen Muggleton.

Even if a person or AI cannot explain in words what it is doing, its behaviour must be “readable” to others, i.e., it must apply rules that they understand.

This “white box” approach will be critical in fields such as healthcare and engineering. Autonomous cars negotiating a junction will need to be able to read the behaviour of others, react and maybe even explain.

For the moment, artificial intelligence still has a lot to learn. It does not yet understand bidding, for example, which is part of bridge and essential to deeper communication between players, nor does it understand lying. — ETX Studio